Note: The research for this post was done on a Beta client and technical details are subject to change.

As indicated in previous posts, we’ve been using Docker on Windows with Hyper-V for a while. Hearing that the new Docker client for Windows would be Alpine-based and focused on Hyper-V made us eager to see for ourselves.

Hyper-V configuration

The first issue to overcome when using Hyper-V on Windows is the lack of DNS/DHCP & NAT services. The new Docker client handles the Virtual Switch NAT configuration for us and adds a clever solution for DNS & DHCP.

As part of the installation process a DockerNAT Internal Virtual Switch is created and the Virtual Interface on the Windows host for this switch gets a static IP:

New-VMSwitch -Name "DockerNAT" -SwitchType Internal

Get-NetAdapter "vEthernet (DockerNAT)" | New-NetIPAddress -AddressFamily IPv4 `
    -IPAddress "10.0.75.1" -PrefixLength 24

A NAT object is created to handle Network Address Translation for the “10.0.75.0/24” subnet:

New-NetNat -Name "DockerNAT" `
    -InternalIPInterfaceAddressPrefix "10.0.75.0/24"

Tip: If any of these steps fail, check that a switch named “DockerNAT” was created, that the IP was assigned to the virtual interface, and that Get-NetNat lists a NAT with the correct subnet. Also make sure these hard-coded subnets do not overlap with existing interfaces (we had to fix these things manually while testing the beta).
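The overlap check from the tip can be scripted. The following is a minimal sketch using Python's standard ipaddress module; the function name and the sample interface addresses are our own illustration, and only the 10.0.75.0/24 subnet comes from the installer steps above.

```python
import ipaddress

# Subnet hard-coded by the beta installer, as quoted above.
DOCKER_NAT_SUBNET = ipaddress.ip_network("10.0.75.0/24")


def find_overlaps(existing_cidrs, candidate=DOCKER_NAT_SUBNET):
    """Return the entries of existing_cidrs that overlap the candidate subnet."""
    overlaps = []
    for cidr in existing_cidrs:
        # strict=False accepts interface-style input such as "10.0.75.1/24".
        network = ipaddress.ip_network(cidr, strict=False)
        if network.overlaps(candidate):
            overlaps.append(cidr)
    return overlaps


if __name__ == "__main__":
    # Hypothetical addresses, e.g. copied by hand from Get-NetIPAddress output.
    interfaces = ["192.168.1.20/24", "10.0.0.5/16", "172.16.4.1/20"]
    print(find_overlaps(interfaces))
```

Any entry returned by find_overlaps is an interface whose network would collide with the DockerNAT subnet.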

Next, the MobyLinuxVM virtual machine is created in Hyper-V. MobyLinuxVM uses an Alpine boot CD that includes Hyper-V Integration Services such as the Key-Value Pair Exchange service (hv_kvp_daemon). The Hyper-V KVP daemon allows communication between the Hyper-V host and the Linux guest (e.g. to retrieve the guest IP and exchange two-way messages, as we’ll see later).

Finally, Docker bundles a com.docker.proxy.exe binary, which proxies the ports from the MobyLinuxVM on your Windows host. At the time of writing (Docker Beta 7), this includes DNS (port 53 TCP/UDP), DHCP (port 67 UDP) and the Docker daemon (port 2375 TCP).

If you’ve been running alternative solutions for your Hyper-V set-up, you need to ensure the above ports are available, as follows.

See if any process is using port 53:

netstat -aon | findstr :53

Once you have the process id (<pid>) of the process holding the port, get the name:

tasklist /SVC | findstr <pid>
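Note that findstr :53 is a substring match, so it also reports ports such as 5353 or 5357. As a rough sketch, the saved netstat -aon output can be filtered for an exact local port in Python. The function name and the sample output below are made up for illustration; the column layout assumed is the usual Proto / Local Address / Foreign Address / State / PID one.

```python
# Hypothetical sample of `netstat -aon` output, used only for illustration.
SAMPLE = """
  Proto  Local Address          Foreign Address        State           PID
  TCP    0.0.0.0:53             0.0.0.0:0              LISTENING       1234
  TCP    0.0.0.0:5357           0.0.0.0:0              LISTENING       4
  UDP    0.0.0.0:5353           *:*                                    5678
  UDP    [::]:53                *:*                                    1234
"""


def pids_using_local_port(netstat_output, port):
    """Return the set of PIDs whose local address uses exactly the given port."""
    pids = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        # Data rows start with the protocol; header and blank rows are skipped.
        if not fields or fields[0] not in ("TCP", "UDP"):
            continue
        local_address, pid = fields[1], fields[-1]
        # rpartition also copes with IPv6 addresses such as [::]:53.
        if local_address.rpartition(":")[2] == str(port):
            pids.add(int(pid))
    return pids


if __name__ == "__main__":
    print(pids_using_local_port(SAMPLE, 53))
```

The resulting process ids can then be passed to tasklist as shown above.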

The com.docker.proxy.exe will proxy all DNS requests from the internal network of your Windows laptop to the DNS server used by your Windows host, effectively isolating the MobyLinuxVM from the network configuration changes as you move your laptop around.

To ensure this process can work properly, Docker automatically creates DockerTcp and DockerUdp firewall rules and removes them when you close the client.

New-NetFirewallRule -Name "DockerTcp" -DisplayName "DockerTcp" `
    -Program "C:\<path>\<to>\com.docker.proxy.exe" -Protocol TCP `
    -Profile Any -EdgeTraversalPolicy DeferToUser -Enabled True

New-NetFirewallRule -Name "DockerUdp" -DisplayName "DockerUdp" `
    -Program "C:\<path>\<to>\com.docker.proxy.exe" -Protocol UDP `
    -Profile Any -EdgeTraversalPolicy DeferToUser -Enabled True

Having the Docker daemon port open locally allows your Docker client to talk to localhost. However, it seems that a named pipe will be used instead going forward. If the VM was created successfully, you should see the named pipe connected to its COM port:

Get-VMComPort -VMName MobyLinuxVM | fl | Out-String

See also the docker client code for handling Windows Named Pipes going forward.

If the MobyLinuxVM booted successfully, we can confirm that the Hyper-V Integration Services are running:

Get-VMIntegrationService -VMName MobyLinuxVM -Name "Key-Value Pair Exchange"

And that the com.docker.proxy.exe DHCP service provided an IP to the VM - which we can query thanks to the Hyper-V Integration Services:

$(Get-VM MobyLinuxVM).NetworkAdapters[0]

Troubleshooting

All settings are stored under the %APPDATA%\Docker\ folder. This folder is replicated across machines in an enterprise setting where roaming profiles are enabled.

All logs are stored under the %LOCALAPPDATA%\Docker\ folder.

To monitor the latest logs use the following PowerShell command:

gc $(gi $env:LocalAppData\Docker\* | sort LastWriteTime -Desc | select -First 1) -Wait

This will auto-refresh for every event written to the log.
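For scripting outside PowerShell, picking the newest log file can be sketched as below. This is only an illustration: the helper names are ours, "newest" is taken to mean most recently modified (an assumption), and the only detail from the post is the %LOCALAPPDATA%\Docker folder.

```python
import os
from pathlib import Path


def default_log_dir():
    """Return the log folder named above (%LOCALAPPDATA%\\Docker)."""
    return Path(os.environ.get("LOCALAPPDATA", "")) / "Docker"


def latest_log(directory):
    """Return the most recently modified file in directory, or None if there is none."""
    files = [p for p in Path(directory).iterdir() if p.is_file()]
    if not files:
        return None
    return max(files, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    log_dir = default_log_dir()
    if log_dir.is_dir():
        print(latest_log(log_dir))
```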

Docker Toolbox Migration

Switching on the Hyper-V role on Windows will disable VirtualBox (and you won’t be able to use the Docker Toolbox until you switch Hyper-V off and reboot). If a Docker Toolbox installation is detected, a migration path is offered (using qemu-img). This converts the %USERPROFILE%\.docker\machine\machines\<machine-name>\disk.vmdk to vhdx:

qemu-img.exe convert <path-to-vmdk> -O vhdx -o subformat=dynamic -p "C:\Users\Public\Documents\Hyper-V\Virtual hard disks\MobyLinuxVM.vhdx"

If you were already using Docker-Machine & Docker-Compose with Hyper-V, you can continue to do so side-by-side with the Docker for Windows client.

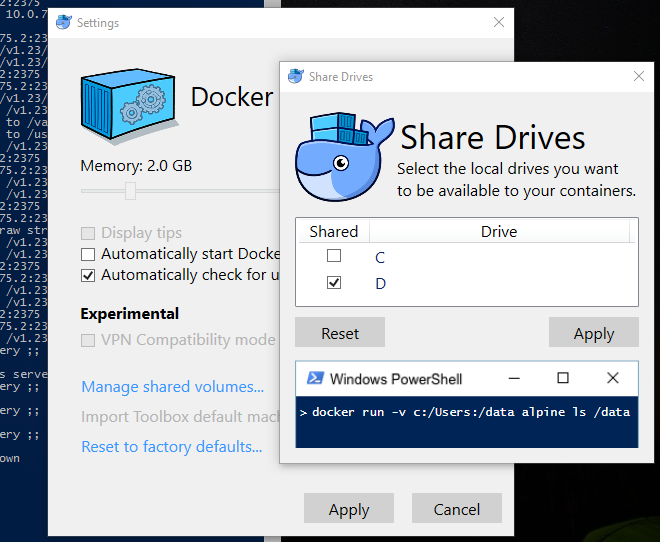

Mounting Volumes

One of the big improvements the new Docker for Windows promises is how Volume mounts will be handled.

A handy dialog is provided to streamline everything for us.

The current implementation will share the whole drive (and not individual folders). Enabling a Drive share will prompt for credentials.

The configuration tool stores the credentials under the target “Docker Host Filesystem Access” in the Windows Credentials store (Control Panel > Credential Manager > Windows Credentials). It uses System.Security.Cryptography to encrypt the credentials with CurrentUser scope.

If the credential manager already contains credentials for the specified target, they will be overwritten.

Next, the drive is shared on the Windows Host:

net share C=C:\ /grant:<username>,FULL /CACHE:None

This SMB share now needs to be mounted into the MobyLinuxVM, which is automated through the Hyper-V Key-Value Pair Exchange Integration Service. A detailed explanation is available here.

The way this is supposed to work is as follows: our Windows host puts a mount authentication token packaged in a KvpExchangeDataItem on the VMBus:

class Msvm_KvpExchangeDataItem : CIM_ManagedElement
{
    uint16 Source = 0;
    string Name = "cifsmount";
    string Data = "authToken";
};

The authentication token is a serialized string containing the mount points and mount options:

/c;/C;username=<username>,password=<password>,noperm
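To make the layout concrete, here is a small sketch that builds and parses such a token. It assumes, based only on the single example above, that fields are separated by semicolons, that the last field holds the comma-separated mount options, and that everything before it is a mount point. The function names are ours, and a password containing a comma or semicolon would break this naive parsing.

```python
def build_token(mount_points, username, password, extra_options=("noperm",)):
    """Serialize mount points and options into the semicolon-separated form."""
    options = [f"username={username}", f"password={password}", *extra_options]
    return ";".join([*mount_points, ",".join(options)])


def parse_token(token):
    """Split a token into (mount_points, options); the inverse of build_token."""
    *mount_points, option_string = token.split(";")
    options = {}
    for item in option_string.split(","):
        key, _, value = item.partition("=")
        options[key] = value  # flag-style options such as noperm map to ""
    return mount_points, options


if __name__ == "__main__":
    token = build_token(["/c", "/C"], "<username>", "<password>")
    print(token)
    print(parse_token(token))
```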

On the Alpine guest, the hv_utils kernel driver module notifies the hv_kvp_daemon. This daemon writes the KVP to the pool file (/var/lib/hyperv/.kv_pool_*).
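To inspect what the daemon wrote, the pool file can be decoded by hand. The sketch below assumes the record layout used by the upstream Linux hv_kvp_daemon, i.e. fixed-size records of a 512-byte key followed by a 2048-byte value, both NUL-padded. We have not verified that the Alpine build in MobyLinuxVM uses the same sizes, and the function names are ours.

```python
KEY_SIZE = 512     # assumed: HV_KVP_EXCHANGE_MAX_KEY_SIZE in the Linux headers
VALUE_SIZE = 2048  # assumed: HV_KVP_EXCHANGE_MAX_VALUE_SIZE in the Linux headers
RECORD_SIZE = KEY_SIZE + VALUE_SIZE


def parse_pool(data):
    """Decode fixed-size key/value records from the raw bytes of a pool file."""
    records = {}
    for offset in range(0, len(data) - RECORD_SIZE + 1, RECORD_SIZE):
        raw_key = data[offset:offset + KEY_SIZE]
        raw_value = data[offset + KEY_SIZE:offset + RECORD_SIZE]
        # Both fields are treated as NUL-padded C strings.
        key = raw_key.split(b"\0", 1)[0].decode("utf-8", errors="replace")
        value = raw_value.split(b"\0", 1)[0].decode("utf-8", errors="replace")
        records[key] = value
    return records


def make_record(key, value):
    """Build one NUL-padded record; used here only to exercise parse_pool."""
    return key.encode().ljust(KEY_SIZE, b"\0") + value.encode().ljust(VALUE_SIZE, b"\0")


if __name__ == "__main__":
    sample = make_record("cifsmount", "/c;/C;username=<username>,password=<password>,noperm")
    print(parse_pool(sample))
```

On the guest, the same function could be fed the bytes of /var/lib/hyperv/.kv_pool_0, read as root.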

At this stage a process on MobyLinuxVM needs to make the directory and mount the share from the host - but this was failing at the time of writing:

# for both the upper- and lower-case mount points
mount -t cifs //10.0.75.1/C /C -o username=<username>,password=<password>,noperm

If the share worked, our Docker client would send any volume mount commands through the socket opened by com.docker.proxy.exe. This proxy rewrites the path if needed: C:\Users\test\ becomes /C/Users/test, allowing us to mount Windows folders into our Docker containers.
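The path rewrite can be illustrated with a few lines of Python. This is our own approximation of the behaviour described, not the proxy's actual code: the function name is hypothetical, and preserving the case of the drive letter is an assumption based on the single example above.

```python
import re

# Drive letter, colon, optional separators, then the rest of the path.
_DRIVE_PATH = re.compile(r"^([A-Za-z]):[\\/]*(.*)$")


def to_vm_path(windows_path):
    """Map a drive-letter path such as C:\\Users\\test\\ to /C/Users/test."""
    match = _DRIVE_PATH.match(windows_path)
    if match is None:
        return windows_path  # not a drive-letter path; leave it untouched
    drive, rest = match.groups()
    # Split on either separator and drop empty parts (e.g. a trailing backslash).
    parts = [p for p in re.split(r"[\\/]+", rest) if p]
    return "/" + "/".join([drive, *parts])


if __name__ == "__main__":
    print(to_vm_path("C:\\Users\\test\\"))
```

Paths that do not start with a drive letter, such as /var/run/docker.sock, pass through unchanged.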

However, there are still limitations due to the SMB protocol (lack of support for inotify and symlinks), which will cause problems with live reloads.

Troubleshooting: We can verify that the auth token exists on the VMBus with the following PowerShell script:

$VmMgmt = Get-WmiObject -Namespace root\virtualization\v2 -Class `
    Msvm_VirtualSystemManagementService
$vm = Get-WmiObject -Namespace root\virtualization\v2 -Class `
    Msvm_ComputerSystem -Filter {ElementName='MobyLinuxVM'}

($vm.GetRelated("Msvm_KvpExchangeComponent")[0] `
    ).GetRelated("Msvm_KvpExchangeComponentSettingData").HostExchangeItems | % {
    $GuestExchangeItemXml = ([XML]$_).SelectSingleNode(`
        "/INSTANCE/PROPERTY[@NAME='Name']/VALUE[child::text() = 'cifsmount']")
    if ($GuestExchangeItemXml -ne $null)
    {
        $GuestExchangeItemXml.SelectSingleNode(`
            "/INSTANCE/PROPERTY[@NAME='Data']/VALUE/child::text()").Value
    }
}

So far, I have not been able to find which process is monitoring the /var/lib/hyperv/.kv_pool_0 files on the Alpine guest.

Private Registries

The beta for Windows does not yet support DOCKER_OPTS or TLS certs.

We can get root access to the MobyLinuxVM as follows:

#get a privileged container with access to Docker daemon

docker run --privileged -it --rm -v /var/run/docker.sock:/var/run/docker.sock -v /usr/bin/docker:/usr/bin/docker alpine sh

#run a container with full root access to MobyLinuxVM and no seccomp profile (so you can mount stuff)

docker run --net=host --ipc=host --uts=host --pid=host -it --security-opt=seccomp=unconfined --privileged --rm -v /:/host alpine /bin/sh

#switch to host FS

chroot /host

Poking around in the VM reveals the following:

The VM (Alpine-based) uses OpenRC as its init system.

rc-status

This shows the status of all services, but some of the init scripts do not implement status and show up as “crashed” even though their process still appears to be running (ps -a).

The Docker init script relies on a /usr/bin/mobyconfig script. This mobyconfig script requires the kernel to boot with a com.docker.database label specifying the location of the config file or it bails. If the label is present - /Database is mounted using the Plan 9 Filesystem Protocol, which was the original filesystem for Docker for Mac.

The mobyconfig script can retrieve network and insecure-registry configuration for the Docker daemon, or pick up a config file from /etc/docker/daemon.json. This looks like a very promising solution once it is fully implemented.
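As an illustration of what such a config file could contain, the sketch below renders a daemon.json body with an insecure registry entry. The insecure-registries key is a standard Docker daemon option; the registry host name is a placeholder, the function name is ours, and we have not confirmed that the beta's mobyconfig script honours this file.

```python
import json


def daemon_config(insecure_registries):
    """Render a daemon.json body that lists the given insecure registries."""
    return json.dumps({"insecure-registries": list(insecure_registries)}, indent=2)


if __name__ == "__main__":
    # Placeholder registry address, used only for illustration.
    print(daemon_config(["registry.example.local:5000"]))
```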

As the whole disk is a temporary filesystem with only the /var/ mount point (on /dev/sda2) persisted, changes made to any of the scripts do not survive reboots. It is possible to temporarily change the Docker options and restart the daemon with /etc/init.d/docker restart.

Conclusion

Many improvements are coming with the Docker client for Windows, and we are looking forward to testing the Docker client for Mac next.